Philosophy and AI

see AI and Philosophy and Religion

“AI is applied philosophy”: so said my Stanford professors back when they were creating what came to be the popular “Symbolic Systems” major. Trying to understand the implications of this new breed of computers requires drawing on disciplines from neuroscience to math to psychology and more – perfect for the back-to-fundamentals questions that philosophers have asked for thousands of years.

see also Can Machines Think

Stephen Wolfram

In What is ChatGPT Doing and How Does it Work?:

And in the end there’s just a fundamental tension between learnability and computational irreducibility. Learning involves in effect compressing data by leveraging regularities. But computational irreducibility implies that ultimately there’s a limit to what regularities there may be.

“language is at a fundamental level somehow simpler than it seems”

but my strong suspicion is that the success of ChatGPT implicitly reveals an important “scientific” fact: that there’s actually a lot more structure and simplicity to meaningful human language than we ever knew—and that in the end there may be even fairly simple rules that describe how such language can be put together.

He uses the example of a “balanced parenthesis” language and shows how training on 10 million examples or so is enough to reach the competence of humans, who can ‘eyeball’ a list of parentheses to tell if they’re balanced. The suggestion is that human languages, too, have syntactic constraints that limit the types of token strings they’ll accept.
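To make the “balanced parenthesis” constraint concrete, here is a minimal sketch of the rule written out by hand. This is my own illustration, not Wolfram's code: he trains a neural net to approximate this rule from examples, whereas the hypothetical `is_balanced` function below simply states it.

```python
def is_balanced(s: str) -> bool:
    """Return True if every '(' in s is closed by a later ')'."""
    depth = 0  # number of currently open parentheses
    for ch in s:
        if ch == "(":
            depth += 1
        elif ch == ")":
            depth -= 1
            if depth < 0:  # a ')' appeared before its matching '('
                return False
    return depth == 0  # nothing may be left open at the end


print(is_balanced("(()())"))
print(is_balanced("(()"))
```

The whole “grammar” fits in a single counter, which is the sense in which a language can be simpler than it looks from a pile of example strings.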

But importantly, note that ChatGPT is also heading toward a semantically meaningful constraint as well: it’s very good at generating only strings that make sense, i.e. that somehow cohere with whatever humans might accept. Syllogistic logic is one such constraint: sentences that “make sense” must be internally consistent.

Although he doesn’t conclude with a generalized theory of meaningfulness, he points to computational languages as proof that it is possible to establish precise constraints – i.e. what can be computed – on what might otherwise be an intractable problem.

July 2023: see his Generative AI Space and the Mental Imagery of Alien Minds

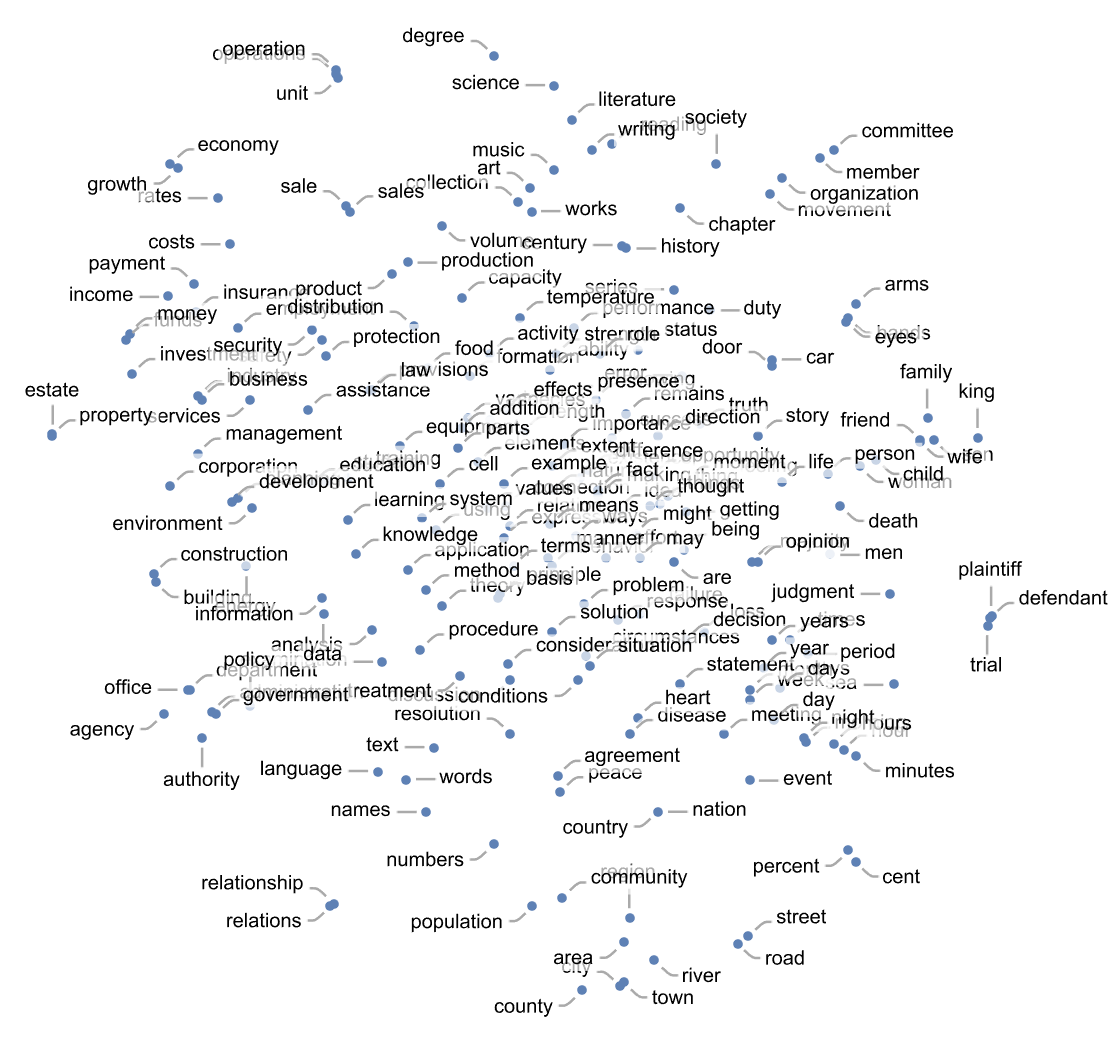

François Chollet describes How I think about LLM Prompt Engineering as a search, not just through word vectors but also through vector programs that correspond to more than simple relationships between words. Word2Vec is a 2013-era technology that allows for “arithmetic on words”, with operations like male_to_female(king) -> queen. Transformers add the concept of attention to let these arithmetic operations scale to handle entire sections of text that point to the transformations themselves. Now the search space includes “vector programs” like write_in_the_style_of_shakespeare(my_input) and everything in between.
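A toy sketch of the “arithmetic on words” idea, assuming made-up two-dimensional vectors (axis 0 loosely “royalty”, axis 1 loosely “gender”). Real Word2Vec embeddings are learned from text and have hundreds of dimensions; the `vectors` table and the `male_to_female` helper here are hypothetical and only mirror the example named above.

```python
import math

# Hypothetical hand-made "embeddings", invented for illustration only.
vectors = {
    "king": (1.0, 1.0),
    "queen": (1.0, -1.0),
    "man": (0.0, 1.0),
    "woman": (0.0, -1.0),
}


def cosine(a, b):
    """Cosine similarity between two vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.hypot(*a) * math.hypot(*b))


def male_to_female(word):
    """Add the (woman - man) offset to a word and return the nearest other word."""
    offset = tuple(w - m for w, m in zip(vectors["woman"], vectors["man"]))
    target = tuple(v + o for v, o in zip(vectors[word], offset))
    candidates = [w for w in vectors if w != word]
    return max(candidates, key=lambda w: cosine(vectors[w], target))


print(male_to_female("king"))
```

With these toy vectors the offset lands exactly on “queen”. In Chollet's framing, a prompt plays the role of the offset: it selects a much richer transformation from the space the model has learned.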

LLMs and Language

On Stochastic Libraries: Large Language Models as library-like Knowledge-Synthesizers. by René Walter

These algorithmic machines that are currently emerging are not intelligent, but they provide access to synthetic recombinations of human knowledge, reminiscent of a new type of library whose combined content is navigated by text input. This new form of library doesn’t provide access to individual human works, but rather continuations, amalgams, remixes, modulations, and interpolations between nodes in a network of existing knowledge, “in the style of Banksy, trending on artstation.”

Jaron Lanier thinks[1] that, instead of the misleading term “artificial intelligence”, we should talk about “an innovative form of social collaboration”.

He adds that the future should include a “Mediator of Individual Data (MID)” or data trust, where people pool their content somehow so they can receive some reward when it’s reused elsewhere. This “data dignity” is supposed to let the little guy have more say in how his data is used, but I think Lanier is ignoring the reason the little guy has no say right now: a single individual contributes very little, in the grand scheme of things. It’s only by aggregating lots of tiny contributions that LLMs or other technologies become valuable.

Talking About Large Language Models Shanahan (ICL): Jan 2023

a great many tasks that demand intelligence in humans can be reduced to next token prediction with a sufficiently performant model.

A bare-bones LLM doesn’t “really” know anything because all it does, at a fundamental level, is sequence prediction.
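For a feel of what “just sequence prediction” means, here is a deliberately tiny sketch: a bigram counter that predicts the next word from the previous one. The corpus and the `predict_next` helper are my own assumptions; a real LLM conditions on long contexts with a neural network, but the interface (tokens in, most likely next token out) is the same.

```python
from collections import Counter, defaultdict

# Tiny made-up corpus, already split into tokens.
corpus = "the cat sat on the mat . the cat ate the fish .".split()

# For each token, count which tokens follow it.
follows = defaultdict(Counter)
for prev, nxt in zip(corpus, corpus[1:]):
    follows[prev][nxt] += 1


def predict_next(token):
    """Return the most frequent follower of token, or None if it was never seen."""
    counts = follows.get(token)
    if not counts:
        return None
    return counts.most_common(1)[0][0]


print(predict_next("the"))
print(predict_next("sat"))
```

Nothing in the table “knows” what a cat is; it only records which words tend to follow which, and that is the distinction Shanahan is pressing.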

Language is an aspect of human collective behavior

LLMs don’t have what Daniel Dennett calls the intentional stance, i.e. an assumption that an interlocutor has beliefs, desires, or intentions.

Be careful to distinguish between knowing that certain words follow each other and knowing a concept.

What if we had a model that used real-time visual feedback from the world in order to update itself? Could such a thing be meaningfully described as “knowing”?

The author’s answer is “not really”, because the visual feedback – while important – is just one narrow part of the world. The system gets correlational feedback about that image, sure, but not about how that image fits into the wider world – a causal association that humans take for granted.

Chomsky on AI

2024-08-19 6:15 AM

Kallini et al. (2024)

via X @pascalefung

Researchers built a corpus of “impossible languages”, made of strings that by design don’t resemble the syntax of any human language. Contra Chomsky, who has claimed that LLMs learn possible and impossible languages equally well, a GPT-2 model trained on these languages ends up with higher perplexity (i.e. it learns them less efficiently) than one trained on a human language.

The results show that GPT-2 models struggle to learn the impossible languages as well as the natural language. The models have higher perplexities and are less surprised by ungrammatical constructions in the impossible languages compared to the control language.
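Since perplexity carries the argument here, a quick sketch of how it is computed: the exponential of the average negative log-probability the model assigned to each token that actually occurred. The probabilities below are invented for illustration and are not figures from Kallini et al.

```python
import math


def perplexity(token_probs):
    """exp of the mean negative log-probability given to the observed tokens."""
    nll = -sum(math.log(p) for p in token_probs) / len(token_probs)
    return math.exp(nll)


# Hypothetical per-token probabilities from two imaginary models.
natural = [0.5, 0.25, 0.5]     # model is fairly confident about each next token
impossible = [0.1, 0.05, 0.1]  # model is mostly guessing

print(perplexity(natural) < perplexity(impossible))
```

A model that spreads its bets evenly over four options at every step has a perplexity of roughly 4, so lower means the model finds the language more predictable.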

2023-01-26 5:32 PM

Also see Chomsky and Moro on the Limits of Our Comprehension, an excerpt from Chomsky and Moro’s 2022 book “The Secrets of Words.”

“It is important to learn to be surprised by simple facts”

Just as it is impossible to teach a rat to follow a prime number maze, there may be concepts beyond the ability of human minds to comprehend.

Chomsky wrote a New York Times Op-Ed (Mar 2023), Noam Chomsky: The False Promise of ChatGPT, in which he gives many classic examples showing how the LLM doesn’t really understand anything.

Note, for all the seemingly sophisticated thought and language, the moral indifference born of unintelligence. Here, ChatGPT exhibits something like the banality of evil: plagiarism and apathy and obviation. It summarizes the standard arguments in the literature by a kind of super-autocomplete, refuses to take a stand on anything, pleads not merely ignorance but lack of intelligence and ultimately offers a “just following orders” defense, shifting responsibility to its creators.

Gary Marcus on Chomsky

In a 2012 New Yorker piece, Marcus gives some anecdotes about Chomsky:

“This conception of ‘renouncing beliefs’ is very odd, as if we’re in some kind of religious cult. I ‘renounce beliefs’ practically every time I think about the topics or find out what someone else is thinking.”

On his Substack, Marcus explains that Chomsky doesn’t like GPT-3 because it’s not real science: it doesn’t explain why it does what it does, so it has little explanatory value.

One clue is how GPT-3 is no better at human languages than it is at computer languages. It’s all just pattern recognition.

even in an immense neural network, with hundreds of billions of parameters, performance on simple 3-digit math problems topped out at 80%.

Ask it why it’s good to eat socks after meditation.

the latest and greatest, InstructGPT, was recently asked to explain why it is good to eat socks after meditation and blithely invoked fictitious authorities, alleging that “Some experts believe that the act of eating a sock helps the brain to come out of its altered state as a result of meditation.”

also Freddie DeBoer: AI, Ozymandias:

The human mind is not “a lumbering statistical engine for pattern matching, gorging on hundreds of terabytes of data and extrapolating the most likely conversational response or most probable answer to a scientific question,” as Chomsky, Ian Roberts, and Jeffrey Watumull argued earlier this year. The mind is rule-bound, and those rules are present before we are old enough to have assembled a great amount of data. Indeed, this observation, “the poverty of the stimulus” – that the information a young child has been exposed to cannot explain that child’s cognitive capabilities – is one of the foundational tenets of modern linguistics.

AI is Becoming a Natural Science

Former AAAI President Subbarao Kambhampati thinks AI is becoming an “ersatz natural science” more concerned with explaining an empirical phenomenon than with describing the underlying rules.

A dear colleague of mine used to preen that he rates papers—including his own—by the ratio of theorems to definitions

Remember that Herb Simon used to refer to “sciences of the artificial”.

Also see the work of Stanford’s Pat Langley, who spent much of his career trying to build machines that can do science.

Counterargument

Sam Hammond articulates the counter-argument in We’re all Wittgensteinians now: The philosophical winners from LLMs (evernote)

My view is thus the exact opposite of Noam Chomsky’s, who argues that the success of Large Language Models is of limited scientific or philosophical import, since such models ultimately reduce to giant inscrutable matrices. On the contrary, the discovery that giant inscrutable matrices can, under the right circumstances, do many things that otherwise require a biological brain is itself a striking empirical datum — one Chomsky chooses to simply dismiss a priori.

Other

Do models use English as their internal language? Wendler et al. (2024) say it is more that they think in concepts, but those concepts are biased towards English. So yes, they think in English, but only in a semantic sense.

AI can be an accessory in the death of traditional languages, or a tool for preserving them.

References

Footnotes

[1] The New Yorker, April 2023